As a 21st-century business, you’re likely relying on data to make key financial and operational decisions. However, to get valuable insights, your data science teams often need to pull data from different locations, including your CRM, ERP, SaaS platforms, and other internal databases.

While this process may be business-as-usual for smaller organizations, it's not practical for data teams to manually manage data as your business grows. As organizations scale, so do the volume and complexity of their data.

With more data sources, there will be more data pipelines. Your data scientists will likely have a hard time handling the increasing number of pipelines and ensuring that all the data and resulting insights are accurate and up-to-date. While most businesses use Workflow Management Systems (WMS) to handle creating and scheduling jobs, most older WMS are inefficient and difficult to scale.

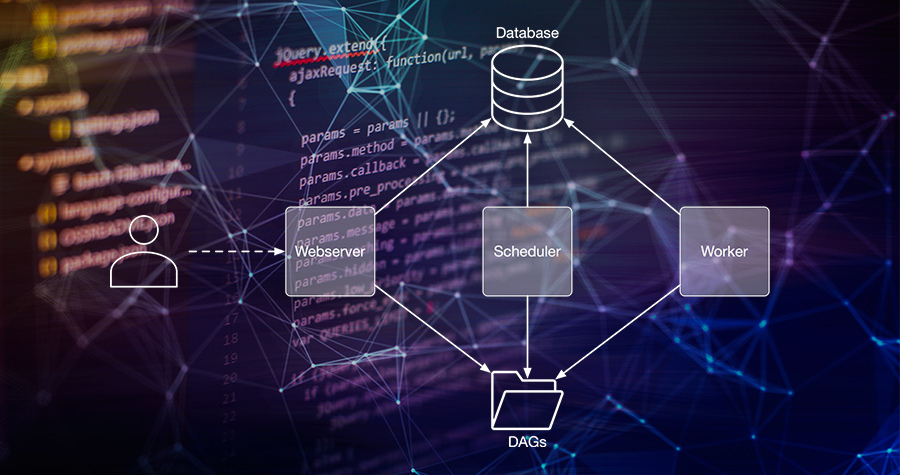

Airbnb faced a similar challenge in 2014. Since its engineers couldn't find software that could handle their growing number of data pipelines, they created Airflow. The project entered the Apache Incubator in 2016 and became a top-level Apache Software Foundation project in 2019. Apache Airflow is much more than another WMS. It is a platform that enables your data science teams to programmatically author, schedule, and monitor workflows.

Before we dive into what Apache Airflow is used for, it helps to understand why building data pipelines matters in a data-driven enterprise.

What is a Data Pipeline?

Data pipelines move your raw data from a source to a destination. The source could be your internal database, CRM, ERP, or any other tool used by your teams to store key business information. The destination is usually a data lake or a data warehouse, where the data is analyzed to generate business insights. As your data travels from the source to the destination, it goes through several transformation steps that prepare the raw data for analysis.

Building data pipelines is essential for enterprises that depend on data for decision-making. Your team members likely utilize several applications to perform different business functions. Manually collecting data from each app for analysis can lead to errors and data redundancy. Data pipelines eliminate these issues by connecting all your disparate data sources into one destination so that you can quickly run analyses to generate valuable insights.

Why Apache Airflow?

Apache Airflow acts like a WMS, but it offers many additional benefits to organizations with extensive data pipelines.

Programmable Workflow Management

In Airflow, workflows are defined as code. As a result, they can be kept in version control, reviewed, and maintained like any other software. With its code-first strategy, Airflow fosters collaboration to build better data pipelines and facilitates testing to ensure the workflow runs smoothly.

Extensible

Another benefit of building data pipelines with Apache Airflow is that it is fully extensible, enabling you to write custom operators that connect to your databases, cloud services, and internal apps. Due to its popularity, Airflow also benefits from a large number of community-contributed operators that you can use directly for your specific workflow requirements.

Scalable

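Airflow is also scalable. Its modular architecture lets it distribute tasks across many workers as the number of pipelines grows, and its REST API enables your data science teams to trigger and manage workflows from external systems and output valuable insights for you quickly.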
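As a rough illustration of how an external system might use the REST API, the sketch below builds (but does not send) a request that asks Airflow to start a run of a pipeline. It assumes the stable REST API path used by Airflow 2.x (`/api/v1/dags/{dag_id}/dagRuns`); the host `http://localhost:8080`, the DAG name `daily_sales`, and the `region` parameter are hypothetical, and authentication is omitted even though a real deployment would require it.

```python
import json
import urllib.request


def build_trigger_request(base_url, dag_id, conf=None):
    """Build (but do not send) a POST request asking Airflow to start a DAG run."""
    # Assumed endpoint: the Airflow 2.x stable REST API path for creating DAG runs.
    url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    body = json.dumps({"conf": conf or {}}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical host, DAG name, and run parameters, for illustration only.
request = build_trigger_request(
    "http://localhost:8080", "daily_sales", {"region": "emea"}
)
print(request.get_method(), request.full_url)
```

Sending the request (for example with `urllib.request.urlopen`) is left out on purpose, since it depends on how your Airflow instance handles credentials.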

Automation Capability

Since workflows are defined as code, Airflow opens up automation opportunities that help your data engineers simplify the repetitive aspects of building pipelines. For example, pipeline teams can automate a workflow to download data from an API, upload the data to the database, generate reports, and email these reports.

Easy Monitoring and Management

The best part about Airflow is its powerful UI, which allows your data engineers to manage and monitor workflows effectively. They can quickly access logs, track execution of workflows, and trigger reruns for failed tasks.

Alerting System

When a task fails, Airflow can send an email to the addresses configured for that task, provided an SMTP server is set up. You can also route alert notifications through a tool like Slack, allowing your team to respond more quickly to critical failures.
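Custom alerts are usually wired in through Airflow's `on_failure_callback` hook, which calls a function of your choice with a context dictionary describing the failed task. The sketch below shows what such a function could look like. The names `build_failure_message` and `notify_on_failure`, the message format, and the `send` parameter (a stand-in for a Slack or email client) are illustrative assumptions, and the context is simulated so the example runs without Airflow installed.

```python
from types import SimpleNamespace


def build_failure_message(context):
    """Format a short alert from the context dict passed to a failure callback."""
    ti = context["task_instance"]
    error = context.get("exception")
    return f"Task {ti.dag_id}.{ti.task_id} failed: {error!r}"


def notify_on_failure(context, send=print):
    """Hypothetical callback: `send` stands in for a Slack or email client."""
    send(build_failure_message(context))


# Simulated context for a local dry run; Airflow supplies the real one at runtime.
fake_context = {
    "task_instance": SimpleNamespace(dag_id="daily_sales", task_id="load_warehouse"),
    "exception": RuntimeError("connection refused"),
}
notify_on_failure(fake_context)
```

In a real pipeline, a function like this would be passed as `on_failure_callback=notify_on_failure` on an individual task or through a DAG's `default_args`.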

Benefits of Airflow for Larger Organizations

In small organizations, workflows are fairly straightforward and can usually be managed by a single data scientist. Larger enterprises, however, need an infrastructure team to keep the numerous data pipelines operational throughout the organization.

Due to large and complex data sets distributed across multiple sources, data teams must regularly inspect the pipelines to ensure data quality and identify and fix errors.

Airflow gives engineers the tools to help them easily connect disparate data sources, schedule efficient workflows, and monitor these workflows through an easy-to-use web-based UI.

Apache Airflow Compared to Other Automation Solutions

While it's possible to automate data pipelines using time-based schedulers like cron, things get complicated when there are multiple workflows with many dependencies between them.

For example, if the dependency for input data is from a third party, your data teams will have to wait for the information to trigger pipelines. There may even be several other teams dependent on the input data to start their workflows within the larger pipeline.

Situations like these are common in large organizations, and a scheduler like cron cannot handle them on its own because it only knows when to start a job, not what that job depends on. Creating and maintaining relationships between tasks is complex and time-consuming when using cron. With Airflow, these dependencies can be declared in a few lines of Python.

Cron also needs external support to log, track, and manage tasks. With Airflow, teams can easily track and monitor workflows as well as troubleshoot issues on the fly with its powerful UI.

How Airflow Automates Workflows

In Airflow, workflows or pipelines are expressed as DAGs (Directed Acyclic Graphs). Each step in a DAG is a task, and each task is created from an operator that defines the work to be done. The tasks, along with the relationships and dependencies between them, are declared in Python code. A DAG can then run on a schedule or be triggered by an event.

For example, consider a simple workflow that involves collecting data on your website’s landing page visits for marketing teams, processing the data, feeding it to a machine learning model, and later generating analysis. With Airflow, it is possible to automate these tasks so that your marketing teams can access up-to-date statistics on their analytics dashboard to perform attribution for marketing campaigns.
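To make the idea of dependency-driven ordering concrete, here is a minimal sketch that uses Python's standard-library `graphlib` rather than Airflow's own API. It models the marketing workflow above as a DAG and derives a valid run order. The task names are hypothetical, and `collect_campaigns` is an extra upstream step added only to show a task waiting on more than one input.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "collect_visits": set(),
    "collect_campaigns": set(),
    "process_data": {"collect_visits", "collect_campaigns"},
    "score_model": {"process_data"},
    "generate_analysis": {"score_model"},
}


def run_order(deps):
    """Return one valid execution order in which every task follows its upstream tasks."""
    return list(TopologicalSorter(deps).static_order())


def is_valid_dag(deps):
    """Return False if the dependencies contain a cycle, which a DAG must not have."""
    try:
        run_order(deps)
    except CycleError:
        return False
    return True


print(run_order(dependencies))
```

In Airflow itself, the same relationships are typically declared between task objects with the `>>` operator, and the scheduler works out when each task is ready to run.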

Let delaPlex Help You Build Better Data Pipelines with Apache Airflow

Apache Airflow is proving to be a powerful tool for organizations like Uber, Lyft, Netflix, and thousands of others, enabling them to extract value by managing Big Data quickly. The tool can also help streamline your reporting and analytics by efficiently managing your data pipelines. At delaPlex, our Apache Airflow experts can collaborate with your internal data science teams to make the most out of this open-source tool.

Want to learn more about how delaPlex can help you? Contact us today to start the conversation.